Major tech companies, including Microsoft, Amazon, and OpenAI, have reached a landmark international agreement on artificial intelligence safety. This agreement, forged on May 21, 2024, at the AI Seoul Summit, marks a significant step toward ensuring the responsible development of advanced AI technologies.

Voluntary Safety Commitments

As part of this pact, tech giants from various countries, including the U.S., China, Canada, the U.K., France, South Korea, and the UAE, have committed to voluntary measures aimed at safeguarding their most advanced AI models. These commitments build on previous agreements made in November 2023 by companies developing generative AI software.

Implementation of Safety Frameworks

AI model developers have agreed to publish detailed safety frameworks outlining how they will address the challenges posed by their frontier models. These frameworks will identify “red lines,” meaning specific risks deemed intolerable, including automated cyberattacks and the potential use of AI in creating bioweapons.

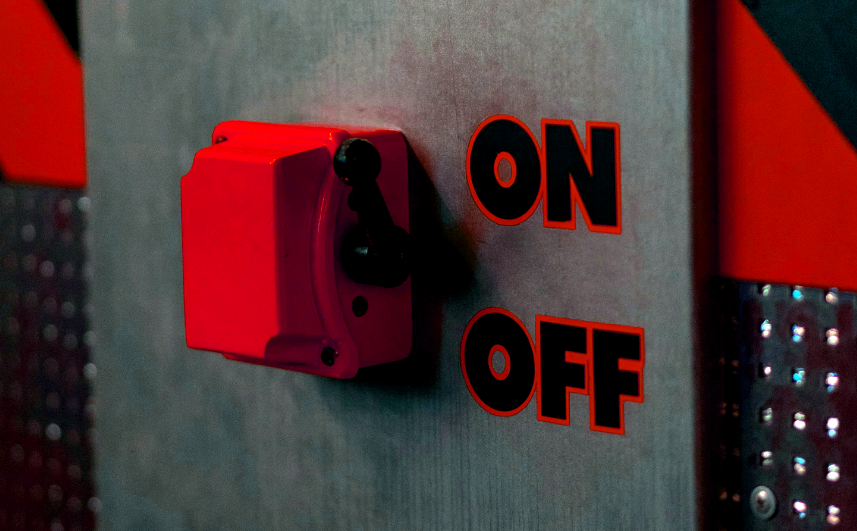

The AI Kill Switch

To tackle such extreme threats, the companies plan to implement an AI “kill switch.”

An AI kill switch is a safety mechanism designed to immediately halt the operation of an artificial intelligence system if it exhibits dangerous or unmanageable behavior. The concept is meant to guard against risks that advanced AI models might pose, such as autonomous actions that could harm individuals or society.

The kill switch can be triggered manually by human operators or automatically by the AI system itself if it detects that it is operating outside safe parameters. This ensures that AI systems remain under human control and can be deactivated if they begin to behave unpredictably or maliciously, thus mitigating risks associated with advanced AI technologies.
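
The agreement does not describe how any company would build such a mechanism, so the following is only an illustrative sketch of the two trigger paths mentioned above: a manual stop by a human operator and an automatic stop when a monitored value leaves its safe range. Every name here (`KillSwitch`, `max_risk_score`, `run_steps`) and the idea of a single numeric "risk score" are assumptions made for the example, not details from the pact.

```python
import threading


class KillSwitch:
    """Hypothetical halt flag that can be tripped manually or by an automatic check."""

    def __init__(self, max_risk_score: float) -> None:
        self.max_risk_score = max_risk_score  # assumed safety threshold
        self.reason = None                    # why the switch was tripped, if it was
        self._halted = threading.Event()
        self._lock = threading.Lock()

    def trigger_manually(self, operator: str) -> None:
        """Manual path: a human operator stops the system."""
        self._halt(f"manual stop by {operator}")

    def check(self, risk_score: float) -> None:
        """Automatic path: trip the switch when the score leaves the safe range."""
        if risk_score > self.max_risk_score:
            self._halt(f"risk score {risk_score} exceeded limit {self.max_risk_score}")

    def _halt(self, reason: str) -> None:
        # Keep only the first reason; once halted, the switch stays halted.
        with self._lock:
            if not self._halted.is_set():
                self.reason = reason
                self._halted.set()

    @property
    def halted(self) -> bool:
        return self._halted.is_set()


def run_steps(switch: KillSwitch, risk_scores) -> int:
    """Process one step per score until the switch trips; return steps completed."""
    completed = 0
    for score in risk_scores:
        switch.check(score)
        if switch.halted:
            break
        completed += 1
    return completed
```

In this sketch the switch is checked before each step, so a manual stop issued at any time takes effect at the next step, and the automatic check halts the run on the first out-of-range score. A real system would need to define what "safe parameters" means in practice, which is exactly what the published safety frameworks are expected to address.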

The implementation of an AI kill switch is part of a broader commitment to AI safety and risk mitigation by major tech companies. As AI systems become more sophisticated and integral to various aspects of daily life, the potential for misuse or unintended consequences increases. These measures are designed to provide an additional layer of security, ensuring that AI development progresses responsibly and aligns with ethical standards. By incorporating kill switches, tech giants aim to build public trust in AI technologies and ensure that they can be safely managed and controlled.

Global Collaboration

The agreement’s global nature is unprecedented, with leading AI companies from diverse regions committing to the same safety standards. U.K. Prime Minister Rishi Sunak highlighted the importance of this cooperation, stating, “It’s a world first to have so many leading AI companies from so many different parts of the globe all agreeing to the same commitments on AI safety. These commitments ensure the world’s leading AI companies will provide transparency and accountability on their plans to develop safe AI.”

Expansion of Previous Commitments

The new pact expands on earlier commitments made in November 2023, with companies now agreeing to seek input from “trusted actors,” including government bodies, before releasing their safety frameworks. These guidelines will be published ahead of the next AI summit, the AI Action Summit in France, scheduled for early 2025.

Focus on Frontier Models

The commitments specifically target “frontier models,” the cutting-edge technologies behind generative AI systems like OpenAI’s GPT series, which powers the widely used ChatGPT. Since its launch in November 2022, ChatGPT has highlighted both the potential and the risks of advanced AI systems, prompting increased scrutiny from regulators and industry leaders.

Regulatory Approaches

Different regions are taking varied approaches to AI regulation. The European Union has recently approved the AI Act, aiming to regulate AI development comprehensively. In contrast, the U.K. has opted for a “light-touch” regulatory approach, applying existing laws to AI technology. While the U.K. government has considered legislating for frontier models, no formal timeline has been established.

Ensuring Safe AI Development

The introduction of the AI kill switch is a crucial step in ensuring that AI technologies are developed safely and ethically. By committing to these safety measures, tech giants aim to build public trust and mitigate the risks associated with AI advancements. This collective effort reflects a growing recognition of the need for responsible AI development to harness the technology’s benefits while minimizing potential harms.